We’ll use different terms for the components of our product:

- The Platform server runs the backend code responsible for the REST API.

- The local worker executes the specific asynchronous Arkindex tasks that require direct access to the database.

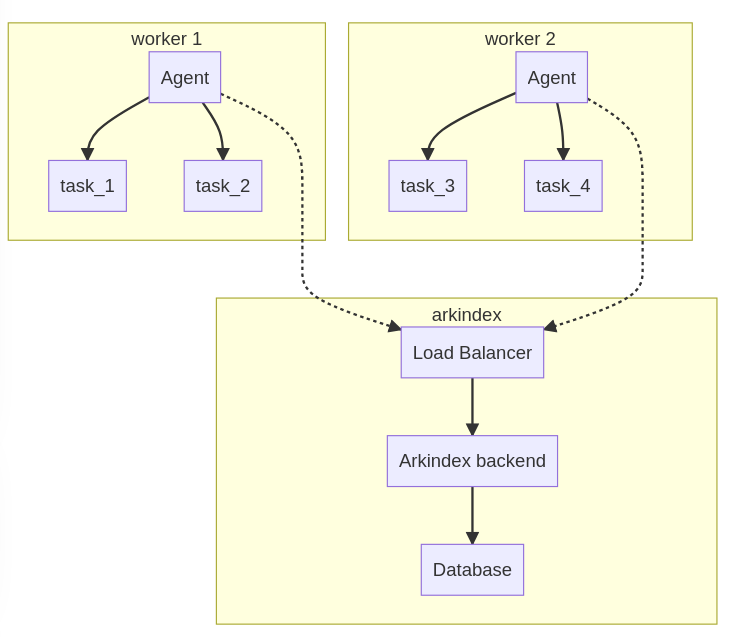

- In the Enterprise Edition, some intensive Machine Learning tasks are executed by Remote workers, using proprietary software called Ponos. One instance of Ponos is called an Agent.

Overview

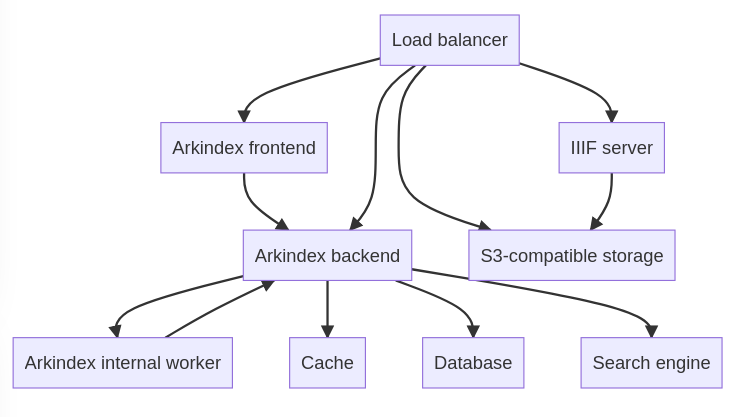

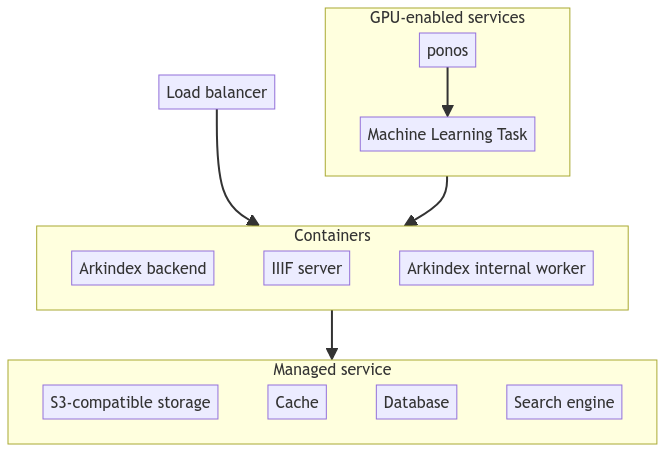

The main part of the architecture combines a set of open-source software with our own software.

The open-source components are:

- Traefik as load balancer

- Cantaloupe as IIIF server

- MinIO as S3-compatible storage server

- Redis as cache

- PostgreSQL as database

- Solr as search engine

Machine Learning

In the Enterprise Edition, you’ll also need to run a set of workers on dedicated servers: this is where the Machine Learning processes will run.

Each worker in the diagram represents a dedicated server, running our in-house job scheduling agents and dedicated Machine Learning tasks.

Common cases

We only cover the most common cases here. If you have questions about your own architecture, please contact us.

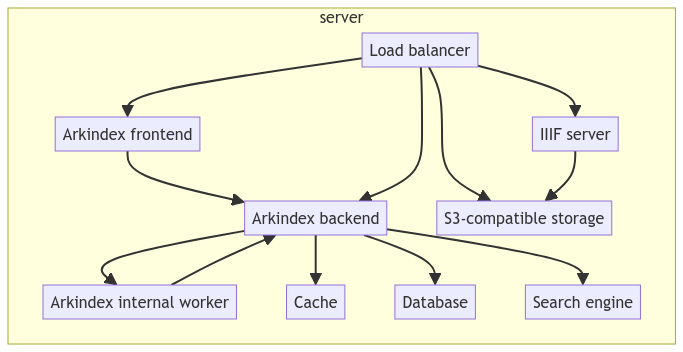

Single Server

This is the simplest option: a standalone server that hosts all the services in Docker containers.

A single docker-compose.yml file is enough to deploy the whole stack.
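As a rough illustration of what such a file could look like, here is a minimal sketch that wires together the open-source components listed in the overview. It is not the official Arkindex stack: the `example/...` image names, the service names, the volume name and the placeholder credentials are all assumptions made for this example, and a real deployment needs additional configuration (routing rules, volumes, secrets).

```yaml
# docker-compose.yml: illustrative sketch only, not the official Arkindex stack.
# Every "example/..." image is a hypothetical placeholder.
services:
  lb:
    image: traefik:v2.11          # load balancer
    ports:
      - "80:80"
      - "443:443"

  backend:
    image: example/arkindex-backend   # hypothetical image for the Platform server (REST API)
    depends_on: [db, redis, solr, s3]

  worker:
    image: example/arkindex-worker    # hypothetical image for the local worker
    depends_on: [db, redis]

  iiif:
    image: example/cantaloupe         # hypothetical image for the Cantaloupe IIIF server

  s3:
    image: minio/minio                # S3-compatible storage
    command: server /data
    environment:
      MINIO_ROOT_USER: change-me      # placeholder credentials
      MINIO_ROOT_PASSWORD: change-me-too

  redis:
    image: redis:7                    # cache

  db:
    image: postgres:16                # database
    environment:
      POSTGRES_PASSWORD: change-me    # placeholder credential
    volumes:
      - pgdata:/var/lib/postgresql/data

  solr:
    image: solr:9                     # search engine

volumes:
  pgdata:
```

With a file of this shape, `docker compose up -d` starts every service on the single host.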

Pros

- Simple to deploy and maintain

- Cheap

Cons

- Limited disk space

- Limited performance

- Single point of failure

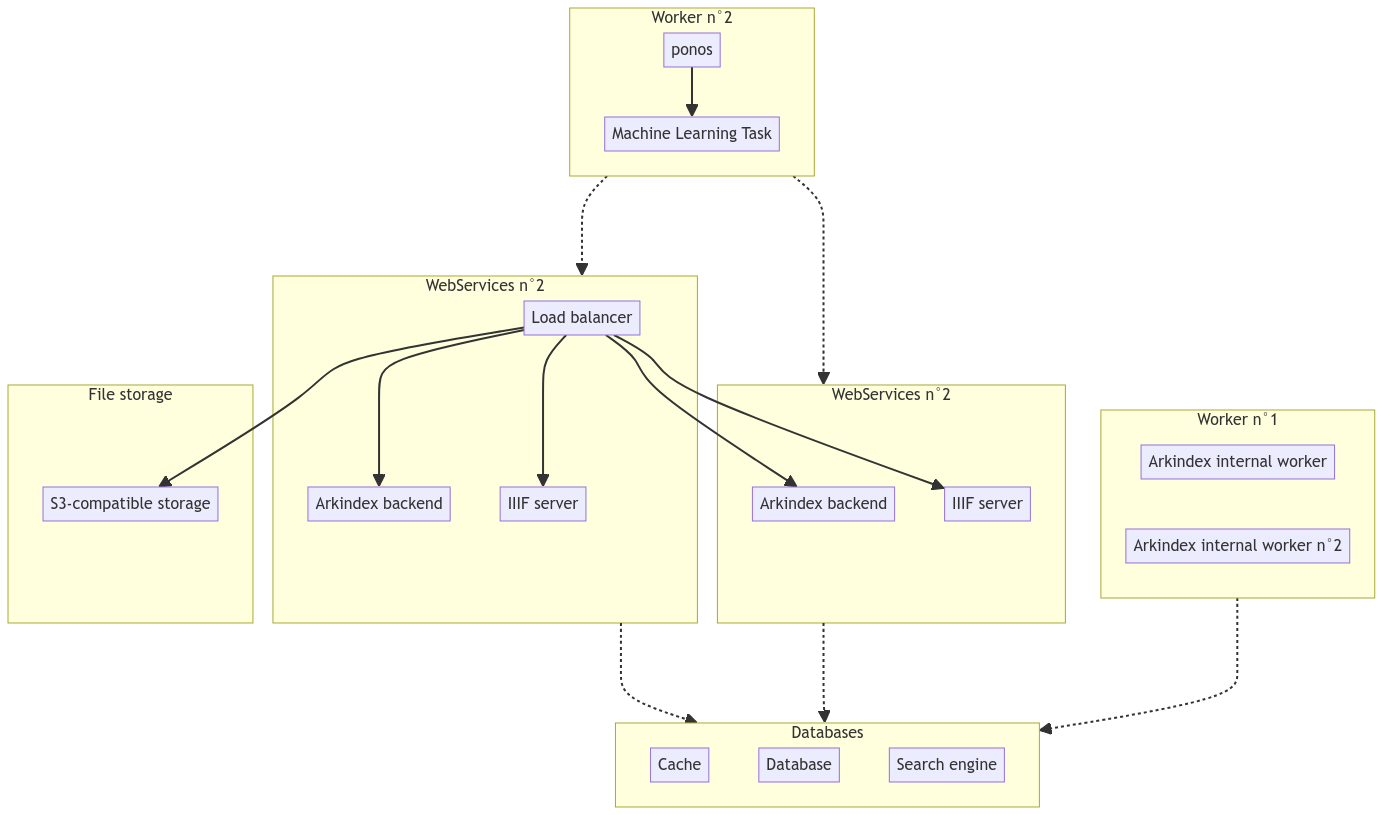

Cluster

With a larger budget, you can deploy Arkindex across several servers, still using Docker Compose files, together with placement constraints on Docker Swarm.

A Docker Swarm cluster lets you run Docker services instead of individual containers. Each service can run several containers, so you benefit from higher throughput and eliminate single points of failure.
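To make replicas and placement constraints more concrete, the fragment below sketches how two services might be pinned to different groups of nodes. The `deploy.replicas` and `deploy.placement.constraints` keys are standard Compose options honoured by Docker Swarm; the image name `example/arkindex-backend`, the `role` node label and its values are assumptions made for this example.

```yaml
# Illustrative Swarm stack fragment, not the official Arkindex configuration.
services:
  backend:
    image: example/arkindex-backend    # hypothetical image name
    deploy:
      replicas: 3                      # several containers for one service
      placement:
        constraints:
          - node.labels.role == app    # hypothetical node label

  db:
    image: postgres:16
    deploy:
      replicas: 1
      placement:
        constraints:
          - node.labels.role == db     # keep the database on its dedicated node
```

Under these assumptions, nodes would be labelled with `docker node update --label-add role=app <node>` and the stack deployed with `docker stack deploy -c docker-compose.yml arkindex`, where `arkindex` is an arbitrary stack name.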

Pros

- High performance

- Service replicas for high availability

- Network segregation for better security

Cons

- Limited disk space

- Harder to maintain and monitor

Cloud provider

You can also deploy Arkindex with a cloud provider (such as Amazon Web Services, Google Cloud Platform or Microsoft Azure), using their managed services instead of self-hosted databases and shared S3-compatible storage.

Most cloud providers offer managed versions of the services required by Arkindex (load balancer, PostgreSQL, S3-compatible storage, search engine and Redis cache). You’ll then need to run the Arkindex containers, either:

- through managed Docker containers;

- by building your own Docker Swarm cluster on their VPS offering.

Pros

- High performance

- Low maintenance for the services you do not host yourself

- Unlimited disk space

Cons

- Expensive

- Vendor lock-in